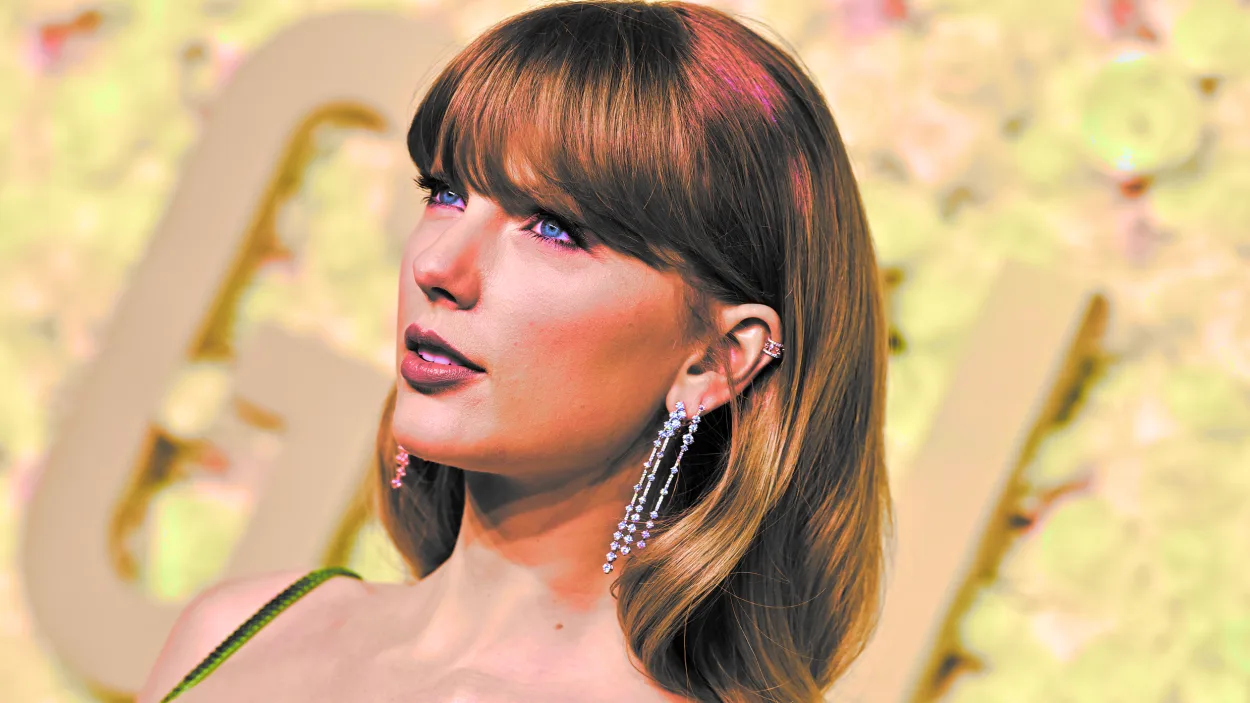

Taylor Swift’s AI-Generated Nude Images Went Viral on X

(CTN News) – Nonconsensual sexually explicit deepfakes of Taylor Swift went viral on X on Wednesday, garnering over 27 million views and more than 260,000 likes in 19 hours before the account that posted the images was suspended.

Deepfakes depicting Taylor Swift nude and in sexual scenarios continue to circulate on X, including reposts of the viral images. Such images can be created with AI tools that generate entirely new, synthetic images, or with tools that take a real image and “undress” it.

The photographs’ origin is unknown, but a watermark indicates that they come from a years-old website infamous for releasing phoney nude celebrity images. The website has a section labelled “AI deepfake.”

Reality Defender, an AI detection software startup, evaluated the photographs and concluded they were most likely made using AI technology.

The photos’ widespread distribution over nearly a day highlights the increasingly frightening spread of AI-generated content and misinformation online.

Although the problem has intensified in recent months, tech platforms like X, which have developed their own generative AI products, have yet to deploy or discuss tools to detect generative AI content that violates their policies.

The most viewed and shared deepfakes of Swift depict her nude in a football stadium. Swift has endured misogynistic criticism for supporting her partner, Kansas City Chiefs player Travis Kelce, by attending NFL games. She addressed the backlash in an interview with Time, saying, “I have no idea if I’m being shown too much and pissing off a few dads, Brads, and Chads.”

X did not immediately respond to a request for comment. Taylor Swift’s agent declined to comment on the record.

X has blocked potentially harmful media but has yet to address sexually explicit deepfakes on the platform. In early January, a 17-year-old Marvel star spoke out about discovering sexually explicit deepfakes of herself on X and being unable to have them removed.

As of Thursday, NBC News was still able to find such content on X. In June 2023, NBC News discovered nonconsensual sexually explicit deepfakes of TikTok stars spreading on the site. After X was approached for comment, only a portion of the content was removed.

Swift’s fans say that their own mass-reporting campaign, rather than any action by Swift or X, led to the removal of the most widely shared images.

After “Taylor Swift AI” began trending on X, Swift’s admirers flooded the hashtag with complimentary tweets about her, according to an analysis by Blackbird.AI, a startup that uses AI technology to safeguard organisations from narrative-driven online attacks. “Protect Taylor Swift” also trended on Thursday.

One of the people who claimed credit for the reporting effort provided NBC News with two screenshots of notices she received from X indicating that her reports resulted in the suspension of two accounts that posted Taylor Swift deepfakes for violating X’s “abusive behaviour” guideline.

The woman who submitted the screenshots, who exchanged direct messages on the condition of anonymity, said she is increasingly concerned about the current effects of AI deepfake technology on ordinary women and girls.

“They don’t take our suffering seriously, so now it’s up to us to mass report these people and have them suspended,” the woman who reported the Taylor Swift deepfakes wrote in a direct message.

Numerous high school-aged girls in the US have reported being victims of deepfakes. There is currently no federal law in the United States that governs the creation and distribution of nonconsensual sexually explicit deepfakes.

Rep. Joe Morelle, D-N.Y., introduced a bill in May 2023 to criminalise nonconsensual sexually explicit deepfakes at the federal level. He commented on X about the Taylor Swift deepfakes, stating, “Yet another example of the destruction deepfakes cause.” Despite gaining the support of a prominent teen deepfake victim in early January, the bill has not progressed since its introduction.

Carrie Goldberg, a lawyer who has represented victims of deepfakes and other forms of nonconsensual sexually explicit material for over a decade, claims that even tech companies and platforms with anti-deepfake policies fail to prevent them from being posted online and spreading quickly through their services.

“Most human beings don’t have millions of fans who will go to bat for them if they’ve been victimised,” Goldberg said on CNN. “Even those platforms that do have deep fake policies, they’re not great at enforcing them, or especially if the content has spread very quickly, it becomes the typical whack-a-mole scenario.”