Through machine learning algorithms we can solve many complex problems. Machine learning makes predictions based on a data revolution. One such algorithm that has gained significant popularity is the Random Forest. Random Forest is a classification algorithm. It is widely recognized for its robustness and versatility. In this article, we will learn about the essence of random forest classification using the Scikit-Learn library. Also, uncovering the inner workings of Random Forest. Let’s find out why machine learning is such a powerful tool.

What is Random Forest Classification?

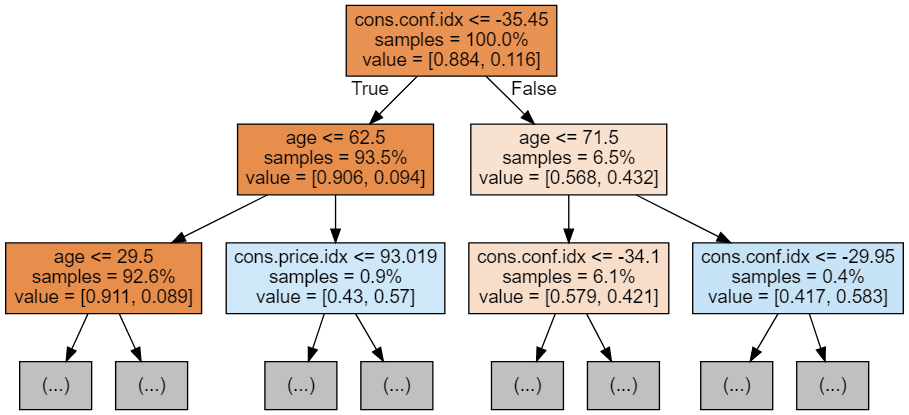

Random Forest is an ensemble learning technique that combines multiple individual models. Also, usually decision trees, to create a more accurate and robust model. Each decision tree is trained on a different subset of the data. And it makes predictions independently. The final prediction is determined by aggregating the predictions of all the individual trees. That is usually through majority voting for classification tasks.

The “random” in Random Forest comes from the two key sources of randomness in the algorithm:

- Random Sampling

- Random Feature Selection

Random Sampling: Each tree in the forest is trained on a random subset of the data, chosen through a process called bootstrapping. This ensures that each tree has a slightly different perspective on the data.

Random Feature Selection: At each node of the decision tree, instead of considering all features to split on, a random subset of features is considered. This introduces diversity among the trees and prevents one dominant feature from overshadowing others.

Why Use Random Forest?

Reduced Overfitting: By creating an ensemble of diverse decision trees, Random Forest reduces overfitting, which can be common with individual decision trees.

High Accuracy: The combination of multiple trees’ predictions often leads to higher accuracy compared to using a single decision tree.

Implicit Feature Selection: The algorithm’s random feature selection process acts as a form of feature selection, filtering out noisy or irrelevant features.

Handles Missing Values: Random Forest can handle missing values effectively, maintaining its predictive power even when data is incomplete.

Using Scikit-Learn for Random Forest Classification

Scikit-Learn, a popular Python library for machine learning, provides a simple and efficient implementation of the Random Forest classifier. Here’s a step-by-step guide on how to use it:

Step 1

Import Libraries: First, make sure you have Scikit-Learn installed. Import the necessary libraries

From sklearn.model_selection import train_test_split

From sklearn.ensemble import RandomForestClassifier

From sklearn.metrics import accuracy_score

Load your dataset and prepare the features (X) and target (y) variables.

Step 2

Split the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Step 3

Initialize the Random Forest Classifier: Create an instance of the Random Forest classifier and specify the number of trees (n_estimators) and other hyperparameters:

Clf = RandomForestClassifier(n_estimators=100, random_state=42)

Step 4

Train the Model: Train the classifier on the training data.

Clf.fit(X_train, y_train)

Step 5

Make Predictions: Use the trained model to make predictions on the test data.

Y_pred = clf.predict(X_test)

Step 6

Evaluate the Model: Evaluate the model’s performance using appropriate metrics such as accuracy.

Accuracy = accuracy_score(y_test, y_pred)

Print(“Accuracy:”, accuracy)

Is Random Forest Classification easy?

Of course! Random forest classification is generally considered relatively simple compared to some other machine learning algorithms. It is an ensemble method that combines multiple decision trees to make predictions. Making it more robust and less prone to overfitting. It does not require complex mathematical understanding. There are built-in methods to handle any missing values. Also, offers to evaluate the importance of all features. There are some hyperparameters to consider though. They are generally less sensitive than other algorithms. However, there are still factors such as determining the number of trees and some level of data preprocessing for optimal performance.

Last words

Random Forest classification is a powerful technique that harnesses the strength of multiple decision trees to provide accurate predictions while mitigating overfitting. With Scikit-Learn, implementing Random Forest becomes a straightforward task, allowing you to leverage its benefits for various classification tasks. Whether you’re dealing with medical diagnoses, financial predictions, or any other data-driven challenge. Random Forest could be your reliable ally in the world of machine learning.

⚠ Article Disclaimer

The above article is sponsored content any opinions expressed in this article are those of the author and not necessarily reflect the views of CTN News