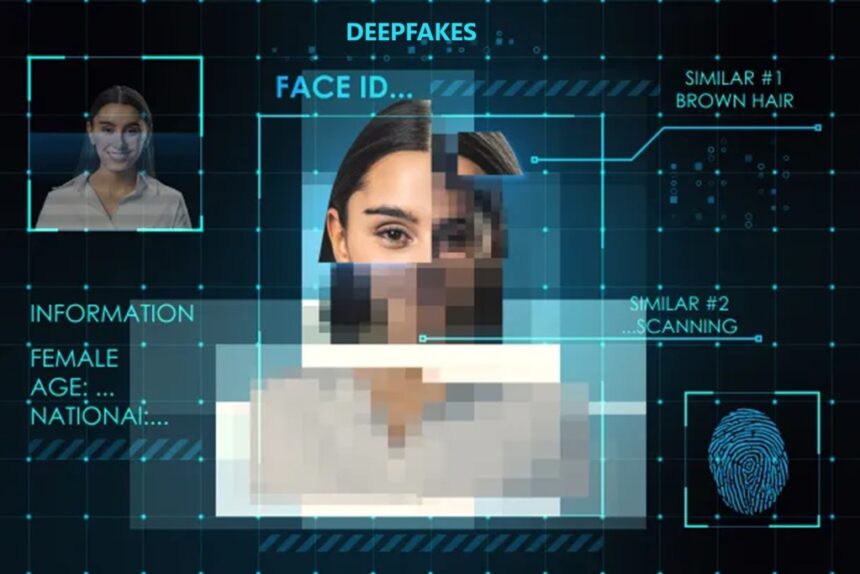

If you have ever seen a video of a politician saying something shocking, or heard a phone call that sounded exactly like a loved one asking for money, you might have wondered if it was real. That doubt is at the heart of deepfakes.

Deepfakes use powerful AI to copy faces, voices, and even whole videos. The result can look and sound real, even when the events never happened. That can be fun in a meme, but dangerous when money, reputation, or democracy is on the line.

This guide walks you through what deepfakes are, how the AI behind them works in simple terms, real examples of harm, how to spot them, and what is being done to fight back. The aim is not to cause panic, but to help you feel more informed and prepared.

What Are Deepfakes and Why Do They Matter?

Deepfakes are AI-generated fake images, videos, or audio that look and sound like the real thing. The word comes from “deep” (as in deep learning, a type of AI) and “fake”.

In the past, you needed a whole film team to fake a realistic scene. Now, a person with a decent laptop and free software can copy your face into a video or clone your voice in an afternoon. That shift is why deepfakes matter so much.

You might see deepfakes used in comedy, memes, or film. But the same tools also help scammers, bullies, and political tricksters. This article focuses on that darker side and what it means for trust in daily life.

Simple definition: Deepfakes in everyday language

Here is a simple way to think about deepfakes:

A deepfake is a fake video, photo, or sound clip made by AI that pretends to be a real person.

For example, someone could:

- Put a celebrity’s face on a different body in a film scene.

- Copy a manager’s voice and call a worker to ask for an urgent money transfer.

Deepfakes are not just a problem for famous people. Anyone with photos or videos online can be a target.

Different types of deepfakes: Video, images, and voice

Deepfake tricks show up in several forms:

- Face swap videos: Your face is pasted onto someone else’s body in a clip.

- Fake photos: An AI-made image puts you in a place or scene where you never were.

- AI voice clones: A short voice sample can be enough to copy your tone and accent.

- Lip-sync clips: Your mouth is made to move in time with new words.

A common scam example is a fake video call from a “relative” who says they have an emergency and need money. The face and voice seem right, but both are generated by AI.

Good, bad, and grey-area uses of deepfake technology

Not every use of deepfake tools is bad.

- Helpful uses: Film studios use them for safer stunts or to “de-age” actors. Voice tools can help people who have lost their voice speak again. Dubbing tools can let actors’ lips match new languages.

- Harmful uses: Scams, blackmail, political lies, and non-consensual sexual images.

- Grey areas: Satire, parody, and memes that make fun of public figures.

The core issue is not the technology itself but how people choose to use it, and how easy it has become for anyone to misuse it.

How Deepfakes Actually Work: The AI Tech Explained Simply

You do not need to be a programmer to grasp the basics of how deepfakes work. Think of the AI as a student that studies thousands of examples until it can mimic a person almost perfectly.

The more data it gets, the more convincing its fakes tend to be.

From real photos and audio to fake media: How training works

To build a deepfake, an AI model is trained on real material:

- For faces, it ingests many photos and video frames of one person.

- For voices, it listens to lots of short audio clips.

It is like a student staring at thousands of drawings of your face until they can sketch you from memory. At first, the sketch is rough. With more practice, the drawing becomes closer to a photo.

If the AI gets only a few low-quality clips, the deepfake will look dodgy. If it gets years of social media photos and interviews, the results can be very convincing.

GANs explained: Two AIs competing to fool each other

One popular method behind deepfakes uses GANs, or generative adversarial networks.

Picture two AI systems locked in a contest:

- The generator makes fake images or frames.

- The discriminator is shown a mix of real images and the generator’s fakes, and labels each one “real” or “fake”.

They play a game. The generator keeps improving to trick the discriminator. The discriminator keeps getting better at spotting fakes. Over time, the generator learns to produce images that even the discriminator struggles to tell apart from the real thing.

That back-and-forth training is a big reason deepfakes now look so realistic.
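If you want to see that game in code, here is a deliberately tiny sketch in Python. It is not how real deepfake models are built. The “generator” learns only one number, b, which shifts random noise. The “discriminator” is a one-variable logistic classifier. The “real data” is assumed to be numbers scattered around 4 rather than photos. The function name and every setting (learning rate, batch size, step count) are illustrative assumptions.

```python
import math
import random


def sigmoid(t: float) -> float:
    # Numerically stable logistic function: maps any score to a 0..1 "realness" value
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 4.0, steps: int = 3000, batch: int = 64,
                  lr: float = 0.05, seed: int = 0) -> float:
    rng = random.Random(seed)
    b = 0.0          # generator G(z) = z + b: it can only learn a shift
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator turn: push D(real) towards 1 and D(fake) towards 0
        # (gradient of -log D(real) - log(1 - D(fake)) with respect to w and c).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator turn: shift b so that fresh fakes score as more "real"
        # (gradient of -log D(G(z)) with respect to b).
        gb = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + b
            gb -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * gb / batch

    return b  # the generator's learned shift


print(train_toy_gan())
```

The printed value should end up close to 4. Note that the generator never sees the real numbers directly. It learns to mimic them purely from the discriminator’s feedback.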

Other AI tools behind deepfakes: Autoencoders, diffusion, and voice cloning

Other tools are working behind the scenes, too:

- Autoencoders learn to squeeze a face down to a compact summary (such as its pose and expression) and then rebuild it. If one person’s summary is rebuilt by a model trained on someone else’s face, the expression carries over, like tracing someone’s smile onto a different face.

- Diffusion models start from random noise and remove it step by step until a sharp, realistic image appears, like polishing a blurry sketch into a detailed portrait.

- Voice cloning models learn the patterns in a person’s speech, such as pitch, tone, and rhythm, so they can rebuild that voice saying any new sentence.

Together, these tools let people not just fake a face, but move it, light it, and give it sound.

Why deepfakes are getting better and easier to make

Several trends have pushed deepfakes forward:

- Computers are faster and cheaper.

- Social platforms are full of photos, videos, and speech that can be scraped as training data.

- Easy-to-use apps and web tools hide the complex code behind simple buttons.

You no longer need to be a specialist. Many deepfake kits come with step-by-step guides. That ease of use is part of the risk.

Real Deepfake Threats: From Politics and Scams to Everyday Trust

Deepfakes are no longer just fun experiments. They are used in real scams, bullying, and political tricks. Recent reports show sharp growth in advanced fraud attempts that rely on AI-generated media.

Deepfakes in politics and news: A new weapon for disinformation

Imagine a video that seems to show a leader:

- Admitting to a crime.

- Insulting a group.

- Announcing a sudden policy or attack.

If that video spreads quickly on social media before journalists can check it, people may react with anger, fear, or hate. Even if experts later prove it was fake, some viewers will keep believing it.

That lasting doubt harms trust in news and in democratic debate. It also gives real wrongdoers an excuse to claim, “That video is just a deepfake,” even when it is not.

In a detailed article on deepfakes, UNESCO has described this as a “crisis of knowing”, in which people struggle to tell what is real.

Scams and fraud: When deepfakes steal money and identities

Scammers are quick to adopt new tricks. Deepfakes give them powerful tools:

- A cloned voice of a boss asking a worker to move company funds.

- A fake video call from a “child” or “grandparent” in trouble.

- A fake job interview where the applicant is actually a deepfake using stolen documents.

There have already been cases where staff joined what looked like a normal video meeting and later found out several people on the call were AI-generated. Large sums of money were transferred as a result.

Groups such as the American Bankers Association and the FBI now publish advice on spotting these tricks, including a joint guide on how to avoid deepfake scams.

A simple rule helps: treat any urgent request for money or sensitive data with suspicion, even if the voice and face seem right. Always confirm through a second trusted channel.

Targeted attacks and bullying: Deepfakes as a tool for abuse

Deepfakes are also used for personal harm:

- Non-consensual sexual videos with someone’s face pasted in.

- Fake clips designed to embarrass a student, colleague, or ex-partner.

- Blackmail attempts that threaten to share fake content unless money is paid.

The emotional effect can be severe. Victims may feel ashamed, unsafe, or powerless, even when they know the video is fake. Young people and women are often hit hardest, but anyone can be a target.

Some countries now have laws against certain deepfake abuses, especially sexual ones, but removing content can still be slow and stressful.

The trust crisis: When we cannot believe our eyes and ears

Once deepfakes exist, even real evidence starts to feel shaky. This feeds what some experts call the “liar’s dividend”.

If a true recording shows a public figure doing something wrong, they can claim, “That is a deepfake.” Supporters might believe them. In a court case or news scandal, that doubt can be enough to muddy the waters.

As deepfakes spread, we risk a world where every clip is questioned, and nothing feels solid. That is why understanding and spotting them matters so much.

How to Spot Deepfakes and Protect Yourself Online

No single trick lets you spot every deepfake. Some fakes are now very polished. Still, some habits and clues can help you stay safer.

Think of it like checking banknotes. You look for signs that one might be fake, then you check the source.

Simple signs that a video or audio clip might be a deepfake

These clues do not prove something is fake, but they can be strong warnings:

- Eyes that blink strangely or not at all.

- Blurry edges where the face meets hair, glasses, or background.

- Lighting on the face that does not match the room.

- Lips that are slightly out of sync with the words.

- Odd, jerky head movements.

- A voice that sounds flat, slightly robotic, or “off” in emotion.

The better the deepfake, the fewer obvious glitches you will see. Your gut feeling still matters. If something feels “wrong”, slow down and check.

Check the source: Who posted it, and where did it first appear?

Deepfakes often appear first on small or unknown accounts, not on trusted news sites.

Ask yourself:

- Who posted this clip first?

- Is the account new, or does it have a history of sharing extreme content?

- Has any trusted news outlet or expert confirmed the story?

You can also:

- Search for the topic on reputable news sites.

- Use reverse image search on a key frame from the video to see where else it appears.

If no reliable source mentions a huge “scandal” or “leak”, treat the clip as unproven.
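Search engines do not all match images the same way, so treat the following only as an illustration of the general idea. One simple, well-known technique for spotting near-duplicate pictures is an “average hash”. You shrink the image, then record which pixels are brighter than average. The Python sketch below assumes each frame has already been shrunk to a small grid of grayscale values (here 8x8). The function names and the `max_diff` threshold are illustrative assumptions, not any search engine’s actual method.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixels: 0 = black, 255 = white


def average_hash(frame: Frame) -> int:
    """Fingerprint a small frame: one bit per pixel, 1 if brighter than the mean."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def looks_like_same_frame(a: Frame, b: Frame, max_diff: int = 5) -> bool:
    """Treat two same-sized frames as near-duplicates if their fingerprints barely differ."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_diff


# Usage: a brightened copy still matches, a colour-inverted one does not.
frame = [[x * 16 + y * 8 for x in range(8)] for y in range(8)]
brighter = [[p + 20 for p in row] for row in frame]
inverted = [[255 - p for p in row] for row in frame]
print(looks_like_same_frame(frame, brighter))  # True
print(looks_like_same_frame(frame, inverted))  # False
```

Because the hash compares each pixel to the frame’s own average, simple changes such as brightening leave the fingerprint intact. That is why a re-uploaded or lightly edited frame can still be traced back to earlier copies.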

Use tools and fact-checks, but keep a healthy doubt

There are online tools that claim to detect deepfakes. They look for tiny patterns, or “fingerprints”, that AI models leave behind and that humans cannot see.

Researchers also publish reviews of new detection methods, such as a study on AI algorithms for detecting deepfakes. These tools are improving, but they are not magic.

Treat automated checks and fact-checking sites as one more input, not the final answer. If a video makes you feel shocked, angry, or scared, pause before you share it.

Personal safety tips: What to do if you are targeted by a deepfake

If you think someone has made a harmful deepfake of you, try to stay calm and act quickly.

- Collect evidence: Save links, take screenshots, and record dates and times.

- Report it: Use the platform’s report tools for harassment or fake content.

- Seek help: Talk to trusted friends or family, and consider legal advice, especially for sexual or blackmail material.

- Contact authorities: In many places, police treat deepfake sexual abuse and serious blackmail as crimes.

- Do not pay: Paying blackmailers usually leads to more demands, not less harm.

You are not alone. Support from people you trust matters as much as the technical steps.

Fighting Back: Laws, Tech Defences, and the Future of Deepfakes

Deepfakes are not going away. But governments, companies, and researchers are working on ways to limit the damage.

The goal is not to ban every AI tool, but to set fair rules, build stronger defences, and help people think more carefully about what they see and share.

New detection tools and watermarks to prove what is real

Researchers are training AI to spot deepfakes by:

- Scanning for tiny inconsistencies in pixels or sound.

- Looking at how light reflects in the eyes.

- Checking background details that generators often get wrong.

At the same time, there is growing work on watermarks and provenance labels. These are like digital stamps that show when, where, and how a photo or video was made.

For example, a camera or editing app could sign each file with a secure mark. Platforms could then label content as “original” or “unknown source”. These systems are still being tested, and they will only work well if many companies adopt them.
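The “sign each file” idea can be sketched in a few lines of Python using the standard `hmac` and `hashlib` modules. This is a simplification. It uses a single shared secret key, whereas real provenance systems generally rely on public-key signatures and certificates, so that anyone can check a mark without being able to forge one. `DEVICE_KEY`, `sign_file` and `label_content` are hypothetical names chosen for illustration.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical secret held inside a camera or editing app (illustration only;
# real systems use public-key signatures rather than a shared secret).
DEVICE_KEY = b"example-device-key"


def sign_file(data: bytes, key: bytes = DEVICE_KEY) -> str:
    """Return a tamper-evident mark: an HMAC-SHA256 tag over the file's bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def label_content(data: bytes, mark: Optional[str], key: bytes = DEVICE_KEY) -> str:
    """Label a file the way a platform might: 'original' only if the mark checks out."""
    if mark is None:
        return "unknown source"
    expected = sign_file(data, key)
    # compare_digest avoids leaking information through comparison timing
    return "original" if hmac.compare_digest(expected, mark) else "unknown source"


# Usage
photo = b"...raw image bytes from the camera..."
mark = sign_file(photo)
print(label_content(photo, mark))              # original
print(label_content(photo + b"edited", mark))  # unknown source
print(label_content(photo, None))              # unknown source
```

Changing even one byte of the file changes the expected mark, so an edited or regenerated file no longer verifies against the original stamp.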

Laws and platform rules are catching up with deepfake abuse

Lawmakers in several places are:

- Creating offences for malicious deepfakes used for fraud or sexual abuse.

- Introducing rules around election content and political adverts.

- Funding research into safer AI and detection.

Social media platforms now often have policies against non-consensual sexual deepfakes and harmful impersonation. They can remove content and suspend accounts, although enforcement is uneven and sometimes slow.

Laws tend to move more slowly than technology, but awareness among regulators is growing.

Why digital literacy and scepticism are your best defence

Even with the best tools and laws, your own habits still matter most.

A few simple habits go a long way:

- Be gently sceptical of dramatic videos and audio.

- Ask who benefits if you believe and share this.

- Look for confirmation from trusted, independent sources.

- Teach children and older relatives to be careful with money requests and shocking clips.

This is not about living in fear. It is about building a calm, thoughtful way of dealing with media, so deepfakes have less power to fool you.

Conclusion

Deepfakes are AI-generated fakes that copy faces, voices, and whole scenes so well that they can be hard to spot. The same tools that help film-makers or people who have lost their voices are also used for scams, political tricks, and personal abuse.

You have seen how the tech works in simple terms, from GANs that pit two AIs against each other to voice cloning that can copy someone after only a short sample. You have also seen the biggest risks: lost money, damaged reputations, shaken trust in the news, and a wider “crisis of knowing” about what we see and hear.

The good news is that you are not helpless. By looking for signs of deepfakes, checking sources, using fact-checks wisely, and supporting fair rules and better tools, you can protect yourself and others. Share this guide with friends or family, talk about deepfakes openly, and help build a more careful, better-informed online world.