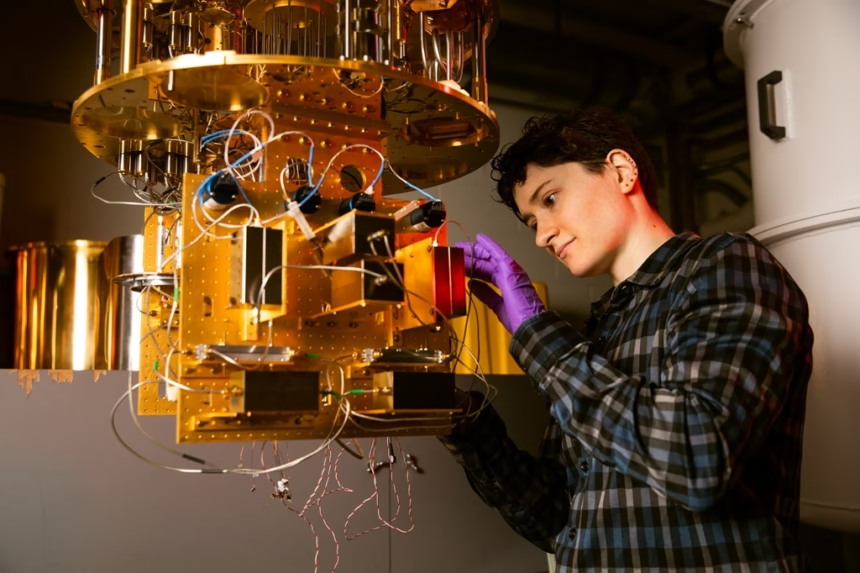

What if quantum computers finally started behaving the way theory promises? In 2025, that question moved from “maybe one day” to “we can see the path.” The year’s breakthroughs are about reliability, not showy stunts. That is why they matter.

Here is your clear guide to the biggest wins, who led them, and how they move the field forward. We will use plain words, no heavy maths, and a focus on what is useful. By the end, you will know what a qubit is, why error correction rules the narrative, what a logical qubit buys you, and why coherence time is the clock everyone watches.

A quick primer:

- Qubit: the quantum version of a bit, which can hold a blend (a superposition) of 0 and 1 until it is measured.

- Error correction: methods that spot and fix mistakes caused by noise.

- Logical qubit: a more stable qubit formed from many physical qubits working together.

- Coherence time: how long a qubit keeps its state before noise ruins it.

Biggest Quantum Breakthroughs of 2025, Explained Simply

This year shifted from bigger chips to better chips. The key change is quality, not just quantity. Teams reported lower error rates, longer coherence, and real plans for fault tolerance. In practice, that means adding qubits starts to reduce errors, not increase them.

The headline moves:

- Google showed below-threshold performance on a 105-qubit superconducting chip called Willow.

- IBM set a public path to fault tolerance with its Quantum Starling plan, aiming for 200 logical qubits by 2029.

- Microsoft advanced topological qubits with Majorana 1 and, in separate work on Atom Computing’s neutral-atom hardware, entangled 24 logical qubits.

- QuEra shared techniques to slash error-correction overhead, which cuts cost and energy needs.

- The Fermilab-led SQMS Center extended coherence to about 0.6 milliseconds, a major reliability boost.

Google’s Willow chip goes below the error threshold

Below threshold means the physical error rate is low enough that error correction gains ground: making the error-correcting code larger makes the logical qubit more accurate. Above the threshold, adding qubits makes things worse, like trying to build a tower with wobbly bricks. Get below it, and more bricks make the tower steadier.

Google’s Willow chip uses 105 superconducting qubits. The team showed that scaling up the error-correcting code reduced the rate of logical errors, a key sign that fault tolerance is within reach. They also ran a random circuit sampling benchmark in under five minutes. Google estimates that a classical supercomputer would need about 10 septillion (10^25) years to match it. That is not a party trick. It is evidence that scaling can work the way theory expects.

Why it matters: once you cross threshold, you can plan. Bigger machines should get more accurate. That flips the story from “limited demos” to “a roadmap that compounds”.
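The threshold idea can be made concrete with a widely used rule of thumb, in which the logical error rate scales roughly as (p / p_th) raised to the power (d + 1) / 2, where d is the code distance. The sketch below is only an illustration: the 1% threshold, the prefactor, and both physical error rates are assumed values, not Willow's measured figures.

```python
def logical_error_rate(p_phys, p_threshold, distance, prefactor=0.1):
    """Heuristic scaling: p_L ~ A * (p / p_th) ** ((d + 1) / 2)."""
    return prefactor * (p_phys / p_threshold) ** ((distance + 1) / 2)


P_THRESHOLD = 0.01  # assumed 1% threshold, for illustration only
DISTANCES = (3, 5, 7)  # larger distance = more physical qubits per logical qubit

for label, p_phys in (("below threshold", 0.005), ("above threshold", 0.012)):
    rates = [logical_error_rate(p_phys, P_THRESHOLD, d) for d in DISTANCES]
    print(label, ["%.4f" % r for r in rates])
```

With the physical error rate under the assumed threshold, each step up in distance lowers the logical error rate. Over the threshold, the same step raises it.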

IBM’s Quantum Starling roadmap to fault tolerance

IBM laid out a step-by-step plan called Quantum Starling. The near-term goal is a fault-tolerant system by 2029 with around 200 logical qubits, able to run roughly 100 million error-corrected operations. The longer plan reaches about 2,000 logical qubits by 2033 in a follow-on system, Blue Jay, alongside concepts for quantum supercomputers of about 100,000 qubits.

Why a public roadmap helps:

- Users can plan workloads, training, and hiring.

- Policymakers can budget for skills, standards, and infrastructure.

- Researchers and vendors can align on benchmarks, not vague promises.

IBM also continues to refine error-correcting codes and architectures that lower overhead. Fewer helper qubits per logical qubit means less energy, less cost, and simpler systems.

Microsoft’s Majorana 1 and Atom Computing hit new logical-qubit milestone

Topological, or Majorana, qubits are engineered to be naturally stable. Think of them as qubits with built-in shock absorbers. If stability holds at scale, they could need far less error correction than other types.

In 2025, Microsoft introduced Majorana 1. In separate work on Atom Computing’s neutral-atom hardware, the two companies entangled 24 logical qubits. That sets a new bar for useful, error-managed building blocks. Larger sets of entangled logical qubits will power early workloads in chemistry, optimisation, and simulation. The direction is clear: grow stable, error-managed logical qubits first, then widen the application set.

QuEra cuts error-correction needs by up to 100 times

Error correction is not only a hardware problem. It also depends on smart layouts and code. QuEra reported methods that cut error-correction overhead dramatically, in some cases by as much as 100 times. Less overhead means fewer total qubits for the same job, shorter runtimes, lower energy, and lower operating cost.

A quick example: if a task needed a million helper qubits before, a 100x cut would bring it near ten thousand. That is the kind of change that turns pilot ideas into practical plans.

Reliability Leaps: Lower Error Rates and Longer Coherence in 2025

Reliability is the story of the year. You can imagine a quantum circuit like a long relay race. If even a tiny error creeps in at each handoff, the team loses. The only way to win is to cut errors per step and finish the race before the baton drops.

Record-low error rates reach about 0.000015% per operation

An error rate per gate tells you how often a single operation goes wrong. Tiny improvements stack up when millions or billions of gates are involved. The record reported this year, about 0.000015 percent per operation (roughly one error in 6.7 million), was for single-qubit gates, and two-qubit gates remain noisier. Numbers like these sound minuscule, but over a long circuit they are the difference between a clean result and scrambled noise.

Picture a million-step recipe. If one step in a thousand fails, the dish is ruined. If one step in ten million fails, you have a real chance to finish.
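The recipe arithmetic can be checked in a few lines. This is a minimal sketch that assumes every gate fails independently with the same probability, which real devices only approximate. The function name is illustrative.

```python
import math


def success_probability(error_per_gate, gate_count):
    """Chance that every gate succeeds, assuming independent, identical errors."""
    # log1p keeps precision when error_per_gate is tiny.
    return math.exp(gate_count * math.log1p(-error_per_gate))


STEPS = 1_000_000  # the million-step recipe

for label, rate in (
    ("one failure in a thousand", 1e-3),
    ("one failure in ten million", 1e-7),
    ("0.000015 percent per gate", 1.5e-7),
):
    print(f"{label}: {success_probability(rate, STEPS):.4f}")
```

At one failure in a thousand, the chance of a clean million-step run is effectively zero. At one in ten million it is about 90 percent, which is why the last few decimal places matter.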

Coherence times climb to about 0.6 ms

Coherence time is how long a qubit keeps its state. Longer is better, because circuits can finish before noise scrambles the result. In 2025, teams including the Fermilab-led SQMS Center reached about 0.6 milliseconds in superconducting devices. They got there by cleaning up loss sources in materials and interfaces, for example by fixing issues in niobium oxide layers and improving fabrication. More time on the clock means more steps per circuit, and that unlocks deeper algorithms.
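To see what "more time on the clock" buys, here is a rough budget calculation. It assumes a gate duration of 50 nanoseconds, a hypothetical figure chosen for illustration and not taken from any device above. It also models decay as a simple exponential, which is a simplification.

```python
import math

COHERENCE_NS = 600_000  # about 0.6 ms, the figure quoted above
GATE_NS = 50            # hypothetical gate duration, not from the article


def gates_in_window(coherence_ns, gate_ns):
    """Count back-to-back gates that fit inside one coherence time."""
    return coherence_ns // gate_ns


def surviving_fraction(elapsed_ns, coherence_ns):
    """Simple exponential decay model: exp(-t / T)."""
    return math.exp(-elapsed_ns / coherence_ns)


print(gates_in_window(COHERENCE_NS, GATE_NS))
print(round(surviving_fraction(1_000 * GATE_NS, COHERENCE_NS), 3))
```

Under these assumptions, about 12,000 gates fit inside one coherence time. Practical circuits use only a fraction of that window, because the state has already decayed noticeably well before the full time is up.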

Logical qubits 101: why they are the path to useful machines

A physical qubit is a single device. A logical qubit is a bundle of many physical qubits that act as one stable unit. The bundle spots and corrects errors as the computation runs. The trade-off is overhead, since you need many physical qubits to make one logical qubit. The payoff is stability. As base error rates fall and coherence rises, the number of physical qubits needed per logical qubit starts to drop. That is how the field scales to useful sizes.
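The overhead trade-off is easy to tabulate for one common scheme. The sketch below assumes a rotated surface code, where a distance-d logical qubit uses d² data qubits plus d² − 1 measurement qubits. Other codes, including lower-overhead ones, have different counts, so treat the numbers as illustrative only.

```python
def physical_per_logical(distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


for d in (3, 5, 7, 11):
    print(f"distance {d}: {physical_per_logical(d)} physical qubits")
```

The count grows with the square of the distance, which is why lower base error rates matter so much: they let a smaller distance reach the same logical error rate.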

What “below threshold” scaling means for the next five years

Below threshold is where error correction starts to win. Add more qubits, and total error falls. Stay above it, and noise keeps building. Google’s Willow gave the field a working example of below-threshold scaling. Expect steady progress over the next five years. Think better codes, better materials, and a climbing count of stable logical qubits, not a sudden jump to science fiction machines.

Real-World Impact: Quantum + AI, Sensing, and Secure Networks

In 2025, the focus widened from lab demos to integrated workflows. Hybrid setups that mix quantum, classical compute, and AI are becoming the default path to value.

Quantum AI synergy grows fast

AI helps design circuits, tune pulses, and predict noise patterns. Quantum hardware can in turn help with optimisation tasks that feed AI systems, such as model scheduling or resource allocation.

Strong activity includes:

- Hybrid workflows where classical solvers hand subproblems to quantum optimisers.

- Generative models that propose circuit layouts or error-correcting tweaks.

- AI-driven error mitigation that adapts on the fly.

Simple example: a logistics team can use a hybrid solver to route vehicles with time windows and capacity constraints. The classical system sets the frame, the quantum step refines the hardest routes.

Quantum sensing steps toward field use

Quantum sensors read tiny changes in fields or forces. They can spot subtle signals that standard tools miss. In health, that could mean earlier detection of anomalies in brain activity or heart signals. In geophysics, that could mean mapping underground features for resources or safety checks. Navigation without GPS is another near-term use, for example in tunnels or urban canyons.

Sensing may reach everyday impact sooner than general-purpose quantum computing, because the devices can be compact and task-focused.

Quantum communication and QKD trials expand

Quantum key distribution, or QKD, is a way to share encryption keys using single photons. If someone tries to eavesdrop, the act of measuring changes the signal, and the parties can detect it. In 2025, more pilots ran with government support to trial ultra-secure links, alongside preparations for post-quantum cryptography. Coverage is still patchy and the gear is costly, but teams are learning quickly.
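The eavesdropping effect can be shown with a toy simulation in the style of the BB84 protocol. This is a classical sketch, not a model of real hardware. It assumes a lossless channel, perfect detectors, and an eavesdropper who measures every photon in a random basis and resends it. All names are illustrative.

```python
import random


def bb84_error_rate(n_photons, eavesdrop, rng):
    """Error rate on the sifted key (rounds where sender and receiver bases match)."""
    errors = kept = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        value, basis = bit, alice_basis  # state of the photon in flight
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != basis:
                value = rng.randint(0, 1)  # wrong basis gives a random outcome
            basis = eve_basis  # the photon is resent in the eavesdropper's basis
        bob_basis = rng.randint(0, 1)
        result = value if bob_basis == basis else rng.randint(0, 1)
        if bob_basis == alice_basis:  # sifting: keep matching-basis rounds only
            kept += 1
            errors += result != bit
    return errors / kept


rng = random.Random(2025)
print("quiet channel:", bb84_error_rate(20_000, False, rng))
print("with eavesdropper:", bb84_error_rate(20_000, True, rng))
```

On a quiet channel the sifted key has no errors. With this intercept-and-resend attack, about a quarter of the sifted bits disagree, and that is the signal the two parties look for.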

Early industry use cases you can follow now

Pilots are small, scoped tests that run on a timeline of weeks to months, often with limited datasets. They aim to prove feasibility, not deliver full production value.

Areas to watch:

- Finance: portfolio optimisation under constraints, risk sampling, limit setting.

- Logistics: vehicle routing, packing, and staff scheduling.

- Materials: small-molecule simulation and catalyst screening.

Vendors like IBM and Nvidia provide toolchains and simulators that help teams try these workflows without buying specialised hardware. The winning approach in 2025 is simple: pick a clear metric, run a pilot, measure the delta, and keep a log of error rates and runtimes.

Money, Policy, and What to Watch in 2026

Quantum is a long game. The right mix of steady funding, clear roadmaps, and sane buyer behaviour kept 2025 grounded.

Funding surge in 2025

Public and private money both scaled. The U.S. Department of Energy committed about 625 million dollars to quantum research centres, which supports materials work, devices, and software. Private investment reached roughly 1.25 billion dollars across the first three quarters. This split matters. Public funding backs the tough, slow fixes in physics and fabrication. Private funding pushes product timelines and early customer pilots.

Roadmaps at a glance: 2026 to 2033

The picture for the next eight years looks like this:

- IBM targets a fault-tolerant system by 2029 with about 200 logical qubits, stepping to roughly 2,000 logical qubits by 2033.

- Google, Microsoft, and others are moving toward below-threshold operation and growing logical-qubit counts.

- Error-correction overhead is trending down due to better codes and layouts across the field.

How to read a roadmap with healthy scepticism:

- Look for reproducible, peer-reviewed error rates and coherence times.

- Ask how often benchmarks are repeated and under what noise model.

- Track logical, not just physical, qubit counts.

- Watch for progress on compiler and mapping tools, not only new chips.

Signs of real traction include lower logical error per cycle, longer sustained logical circuits, and stable, repeatable runs across devices.

Get started today without big spend

You can start learning and testing without buying a cryostat.

- Learn the basics: qubits, gates, circuits, and error channels.

- Use cloud access to small devices or high-fidelity simulators.

- Pick a toy problem in optimisation, like a small routing or knapsack instance.

- Write it once, then re-run it every quarter as hardware and tools improve.
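A toy problem needs a classical baseline to compare against. Here is a minimal sketch for a small 0/1 knapsack instance, solved exactly with dynamic programming. The item weights and values are made-up example data.

```python
def knapsack_best_value(items, capacity):
    """Exact 0/1 knapsack by dynamic programming over remaining capacity."""
    best = [0] * (capacity + 1)
    for weight, value in items:
        # Walk capacity downwards so each item is used at most once.
        for c in range(capacity, weight - 1, -1):
            best[c] = max(best[c], best[c - weight] + value)
    return best[capacity]


# (weight, value) pairs: hypothetical example data.
ITEMS = [(12, 4), (2, 2), (1, 1), (1, 2), (4, 10)]
CAPACITY = 15

print(knapsack_best_value(ITEMS, CAPACITY))  # prints 15
```

Any quantum or hybrid run on the same instance can then be scored against this exact answer, and the comparison stays meaningful from quarter to quarter.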

Keep a notebook for each run:

- Device, qubit count, and reported error rates.

- Circuit depth, runtime, and cost.

- Result quality compared to your classical baseline.
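One lightweight way to keep that notebook is a CSV file with one row per run. The sketch below is an assumption about how you might structure it. The field names, the `RunRecord` class, and the sample values are all hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class RunRecord:
    run_date: str
    device: str
    qubit_count: int
    reported_error_rate: float
    circuit_depth: int
    runtime_s: float
    cost_usd: float
    result_value: float
    classical_baseline: float

    @property
    def quality_ratio(self):
        """Result quality relative to the classical baseline (1.0 means parity)."""
        return self.result_value / self.classical_baseline


def append_record(stream, record, write_header=False):
    """Write one run as a CSV row, with an optional header for a new file."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(RunRecord)])
    if write_header:
        writer.writeheader()
    writer.writerow(asdict(record))


# Hypothetical sample entry; an in-memory buffer stands in for a real file.
record = RunRecord("2025-12-01", "example-simulator", 5, 0.001, 40, 2.5, 0.0, 14.0, 15.0)
buffer = io.StringIO()
append_record(buffer, record, write_header=True)
print(buffer.getvalue())
print(round(record.quality_ratio, 3))
```

Swapping the buffer for a file opened in append mode gives a running log that makes quarter-on-quarter changes easy to chart.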

Smart questions for vendors and partners

Put these on your checklist:

- What is your error model, and does it match real device noise?

- How many logical qubits can you run today, and with what code distance?

- What is your typical and worst-case coherence time?

- Which benchmarks do you pass, and how often do you repeat them?

- How will you cut error-correction overhead next year, and what is your proof plan?

Ask for evidence, not a demo that runs once on a sunny day.

Quick Reference: 2025 Highlights and What They Mean

| Area | 2025 highlight | Why it matters |
|---|---|---|
| Below-threshold operation | Google’s 105-qubit Willow shows scaling wins | Bigger devices can mean fewer logical errors |
| Fault-tolerance roadmap | IBM’s Starling to 200 logical qubits by 2029 | Clear targets for users and policymakers |
| Topological qubits | Microsoft Majorana 1 progress | Potentially less error correction needed |
| Logical-qubit scale | Entanglement of 24 logical qubits | Larger, useful building blocks for workloads |
| Overhead reduction | QuEra reports up to 100x cuts | Lower cost, energy, and time-to-solution |
| Coherence gains | About 0.6 ms in superconducting devices | Deeper circuits before noise hits |
| Funding | $625m DOE, ~$1.25b private in 2025 | Sustains the long arc to fault tolerance |
| Real-world pilots | Finance, logistics, materials | Practical steps with measurable outcomes |

Conclusion

2025 reset expectations. The field cut errors, stretched coherence, and showed early signs that fault tolerance is not just a theory slide. Willow’s below-threshold result, IBM’s public plan, Majorana progress, and overhead cuts all point the same way.

Three simple next steps:

- Pick one roadmap and follow quarterly updates.

- Try a cloud demo and re-run it every few months.

- Track a pilot in your industry and note concrete metrics.

The opening question was whether quantum would finally behave as promised. This year’s answer is cautious but clear: it is starting to. The pieces are lining up, and steady progress beats hype. If you are curious, now is the right time to get hands-on and stay close to the data.