Detecting ChatGPT Plagiarism, and Why It’s So Difficult

(CTN NEWS) – ChatGPT is one of the most popular chatbots available today. However, because of how potent and humanlike its responses are, academics, teachers, and editors all have to deal with increased plagiarism and cheating caused by AI.

Older plagiarism detection systems may not be able to distinguish human-written content from AI-generated content.

In this article, I briefly discuss this worrying aspect of AI chatbots, look at a few online tools for detecting AI-generated text, and assess how serious the situation has become.

Numerous Options For Detection

OpenAI’s release of ChatGPT in November 2022 catapulted chatbot capabilities into the spotlight. It made it possible for the average person (or any professional) to produce intelligent, readable essays or articles and to solve text-based math problems.

The AI-created work can easily pass for a legitimate piece of writing to an uninformed or inexperienced reader, which is why students adore it, and teachers despise it.

A significant problem is the capacity of AI writing tools to use natural language and grammar to create original, nearly individualized text, even when the underlying content was drawn from existing sources.

The race to defeat AI-based cheating has thus begun. Here are a few choices I discovered that are currently free.

OpenAI, the company that created ChatGPT, released the GPT-2 Output Detector to demonstrate a model that can detect chatbot-written text.

The tool is simple to use: enter text into the text box, and Output Detector estimates how likely it is that a human wrote it.

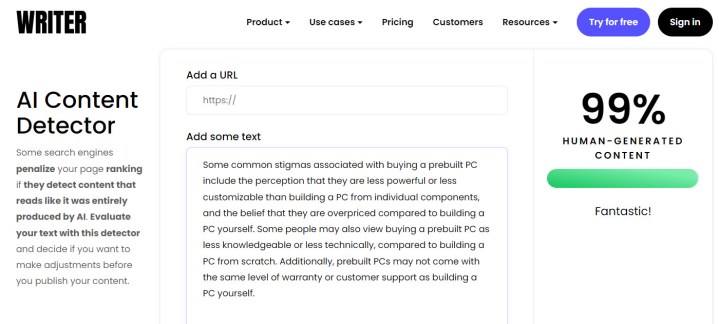

Writer AI Content Detector and Content at Scale are tools with tidy user interfaces like ZergoGPT. You can either paste in text manually or add a URL to scan its content (Writer only).

The results are given as a percentage score that indicates how likely it is that a human wrote the content.

GPTZero is a homemade beta tool developed by Princeton University student Edward Tian and hosted on Streamlit. What sets this “AIgiarism” (AI-assisted plagiarism) detector apart from the competition is the way it presents its findings.

GPTZero breaks its results down into two measures: perplexity and burstiness. Perplexity measures randomness within a sentence, whereas burstiness measures overall randomness across all the sentences in a document.

The program assigns a number to each measure; the lower the number, the more likely it is that a bot generated the text.
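To make the two measures concrete, here is a minimal sketch. It is not GPTZero's implementation, which the tool does not publish in this form: it assumes a toy add-one-smoothed unigram model in place of a real language model, and it assumes burstiness can be approximated by the spread (standard deviation) of per-sentence perplexity. All function names are hypothetical.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase word tokenizer (an assumption; real tools use subword tokens)."""
    return re.findall(r"[a-z']+", text.lower())


def build_unigram_model(reference_text):
    """Return a function giving add-one-smoothed unigram probabilities."""
    counts = Counter(tokenize(reference_text))
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability mass for unseen words

    def prob(word):
        return (counts[word] + 1) / (total + vocab_size)

    return prob


def sentence_perplexity(sentence, prob):
    """exp of the average negative log-probability of the sentence's words."""
    words = tokenize(sentence)
    if not words:
        raise ValueError("sentence contains no words")
    avg_neg_log = -sum(math.log(prob(w)) for w in words) / len(words)
    return math.exp(avg_neg_log)


def document_scores(document, prob):
    """Return (mean perplexity, burstiness) for a document.

    Burstiness is approximated here as the standard deviation of the
    per-sentence perplexities (an assumption made for illustration).
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", document.strip()) if tokenize(s)]
    if not sentences:
        raise ValueError("document contains no sentences")
    perplexities = [sentence_perplexity(s, prob) for s in sentences]
    return sum(perplexities) / len(perplexities), pstdev(perplexities)


if __name__ == "__main__":
    model = build_unigram_model("the cat sat on the mat the dog sat on the rug")
    print(document_scores("The cat sat on the mat. A zebra pondered quantum physics.", model))
```

Under this sketch, predictable sentences score a low perplexity, and a document whose sentences are all equally predictable scores a burstiness near zero, which matches the idea that lower numbers point toward a bot.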

For fun, I also included the Giant Language Model Test Room (GLTR), created by researchers from the Harvard Natural Language Processing Group and the MIT-IBM Watson AI Lab. Like GPTZero, it doesn’t give a clear “human” or “bot” verdict in its final results.

GLTR uses a bot to detect content generated by bots, on the principle that bots are less likely to choose surprising words. The results are displayed as a color-coded histogram that lets you compare AI-generated text with human-written text.

The more unpredictable words the text contains, the more likely it is that a human wrote it.
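The histogram idea can be sketched as follows. This is a simplified illustration, not GLTR's code: GLTR ranks each word by how strongly a language model predicted it in context, whereas this sketch assumes a plain word-frequency ranking built from a reference text. The bucket limits of 10, 100, and 1,000 and all function names are assumptions chosen for the example.

```python
import re
from collections import Counter

# Assumed rank buckets: smaller rank means a more predictable word.
BUCKETS = [(10, "top 10"), (100, "top 100"), (1000, "top 1000")]
UNLIKELY = "beyond 1000"


def words_of(text):
    return re.findall(r"[a-z']+", text.lower())


def rank_table(reference_text):
    """Map each word to its frequency rank (1 = most frequent) in the reference."""
    counts = Counter(words_of(reference_text))
    return {word: rank for rank, (word, _) in enumerate(counts.most_common(), start=1)}


def bucket_for(rank):
    """Return the bucket label for a rank; unknown words count as surprising."""
    if rank is None:
        return UNLIKELY
    for limit, label in BUCKETS:
        if rank <= limit:
            return label
    return UNLIKELY


def rank_histogram(text, ranks):
    """Count how many words of `text` fall into each predictability bucket."""
    hist = Counter()
    for word in words_of(text):
        hist[bucket_for(ranks.get(word))] += 1
    return hist


if __name__ == "__main__":
    ranks = rank_table("the cat sat on the mat and the dog sat on the rug")
    print(rank_histogram("The cat sat on the ottoman", ranks))
```

A text whose words nearly all land in the most predictable bucket looks bot-like under this scheme, while a larger share of words in the surprising bucket suggests a human writer.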

Putting Them to the Test

With all these options, you might think AI detection is well in hand. I wanted to try each of these tools, though, to see how effective they really were.

So I tested a few sample paragraphs that I had written in response to questions I had also put to ChatGPT.

My first question was straightforward: Why is purchasing a prebuilt PC frowned upon? Here is how my response and ChatGPT’s compared.

| Detector | My real writing | ChatGPT |
| --- | --- | --- |
| GPT-2 Output Detector | 1.18% fake | 36.57% fake |
| Writer AI | 100% human | 99% human |
| Content at Scale | 99% human | 73% human |
| GPTZero | 80 perplexity | 50 perplexity |
| GLTR | 12 of 66 words likely by human | 15 of 79 words likely by human |

As you can see, most of these apps could tell that my words were genuine, with the first three being the most confident. But ChatGPT’s response also managed to fool most of these detectors.

For starters, it received a 99% human score from the Writer AI Content Detector and was rated only 36% fake by the GPT-2 Output Detector.

The worst offender was GLTR, which indicated that ChatGPT’s words and mine were about equally likely to have been written by a human.
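The GLTR comparison is easier to see as percentages. The short calculation below uses only the word counts reported in the table; the function name is hypothetical.

```python
def human_share(likely_human_words, total_words):
    """Percentage of words that GLTR marked as likely written by a human."""
    return 100 * likely_human_words / total_words


mine = human_share(12, 66)      # my real writing
chatgpt = human_share(15, 79)   # ChatGPT's response

print(f"My writing: {mine:.1f}% of words likely human")     # prints 18.2%
print(f"ChatGPT:    {chatgpt:.1f}% of words likely human")  # prints 19.0%
```

The two shares differ by less than one percentage point, which is why GLTR could not separate the two texts in this test.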

But I decided to give it another go, and the results were much better this time. I asked ChatGPT for a summary of the Swiss Federal Institute of Technology’s research into anti-fogging using gold particles.

In this case, the detector apps were far more successful at validating my own response and identifying ChatGPT’s.

| Detector | My real writing | ChatGPT |
| --- | --- | --- |
| GPT-2 Output Detector | 9.28% fake | 99.97% fake |
| Writer AI | 95% human | 2% human |
| Content at Scale | 92% human | 0% (Obviously AI) |
| GPTZero | 41 perplexity | 23 perplexity |
| GLTR | 15 of 79 words likely by human | 4 of 98 words likely by human |

In this test, the top three detectors really showed their strength. And while GLTR still struggled to recognize my writing as human, at least this time it did a good job of catching ChatGPT.

Conclusion

The results of each test make it clear that online plagiarism checkers are flawed. AI-generated writing is a bit easier to spot in more complex answers or pieces of writing, but it is much harder to detect in simpler answers.

Either way, it’s not what I’d call reliable. These detectors sometimes mistakenly label human-written articles or essays as ChatGPT-generated, which makes it hard for professors or editors to rely on them to catch cheaters.

Developers are constantly tuning their detectors’ accuracy and false-positive rates.

However, they also have to keep pace with the GPT-3 family of models on which ChatGPT is built, which boasts a substantially larger dataset and more advanced capabilities than GPT-2.

At this stage, editors and educators will need to combine judgment and a little human intuition with one (or more) of these AI detectors in order to recognize AI-generated content.

And if you have used chatbots like Chatsonic, ChatGPT, Notion, or YouChat to pass off your “work” as legitimate, or are tempted to, please don’t.
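One way to picture combining several detectors with human judgment is a simple vote that hands any disagreement to a person. This is only a sketch under stated assumptions: the 0.5 threshold, the unanimous-vote rule, and the function names are all hypothetical, and the input numbers are the ChatGPT scores from the two tests above.

```python
def ai_probability(score_percent, scale):
    """Convert a detector reading to a 0..1 'probably AI' value.

    scale is "fake" when the tool reports % fake, "human" when it reports % human.
    """
    if scale == "fake":
        return score_percent / 100
    if scale == "human":
        return 1 - score_percent / 100
    raise ValueError(f"unknown scale: {scale}")


def combine(readings, threshold=0.5):
    """Return a verdict from several detector readings (assumed voting rule)."""
    if not readings:
        raise ValueError("no detector readings supplied")
    votes = sum(ai_probability(score, scale) >= threshold
                for score, scale in readings.values())
    if votes == len(readings):
        return "likely AI"
    if votes == 0:
        return "not flagged"
    return "needs human review"


# ChatGPT's scores from the first and second tests.
test_one = {"GPT-2 Output Detector": (36.57, "fake"),
            "Writer AI": (99, "human"),
            "Content at Scale": (73, "human")}
test_two = {"GPT-2 Output Detector": (99.97, "fake"),
            "Writer AI": (2, "human"),
            "Content at Scale": (0, "human")}

print(combine(test_one))  # prints "not flagged"
print(combine(test_two))  # prints "likely AI"
```

Note that the first test slips through even when all three tools are combined, which is exactly why a human reviewer still has to stay in the loop.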

No matter how you look at it, reusing information produced by a bot (drawing on fixed sources in its database) is still plagiarism.
